Lecture 1.4

Logistics

- GitHub Classroom

- Group project proposal deadline: Oct 11

AI Traditions & History

- Be aware of fields that AI inherits from

- When the history of “AI” starts depends on what you call AI

- See some historical examples of attempts at implementing AI

- See some historical examples of attempts at measuring AI

History

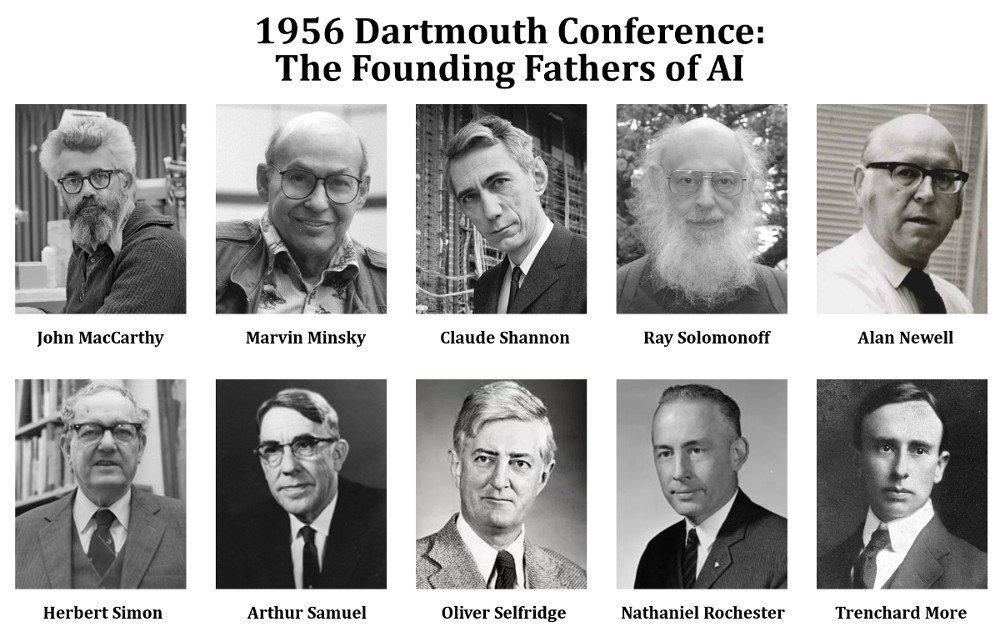

Dartmouth Summer Conference

- Held in 1956, a seminal event in the history of artificial intelligence

- Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon

- Brought together leading researchers to explore the concept of “artificial intelligence”

- Lasted for eight weeks

- Focused on various aspects of machine intelligence, including natural language processing, neural networks, and problem-solving

- Although immediate outcomes were limited, widely regarded as the birthplace of AI as a distinct field of study

Pre-History

- Bayes’ theorem (1763) by Thomas Bayes, laying the foundation for probabilistic reasoning

- Method of least squares (1805) by Adrien-Marie Legendre, crucial for linear regression

- Boolean algebra (1854) by George Boole, fundamental for logical reasoning in computers

- Formal logic systems by Gottlob Frege and Bertrand Russell (late 19th/early 20th century)

- Turing machine concept (1936) by Alan Turing, establishing the basis for computational theory

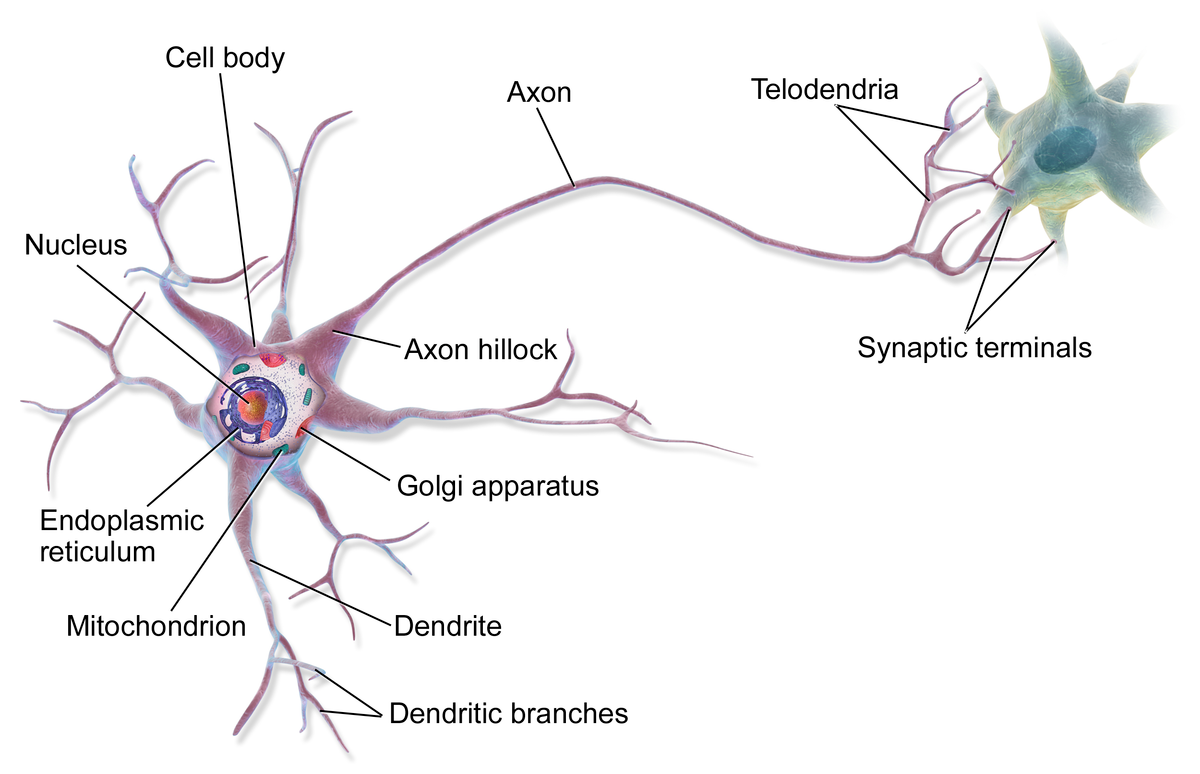

- McCulloch-Pitts neuron model (1943), an early artificial neuron concept

- Cybernetics (1948) by Norbert Wiener, exploring control and communication in machines and animals

Traditions

Symbolic AI

- Focuses on high-level symbolic representations of problems

- Uses logical reasoning and rule-based systems

- Emphasizes explicit knowledge representation

- Relies on symbol manipulation for problem-solving

- Examples include expert systems, logic programming, and classical AI planning systems like STRIPS (Stanford Research Institute Problem Solver)

Historical note: Symbolic AI dominated the field from the 1950s to the 1980s, often referred to as the “Good Old-Fashioned AI” (GOFAI) era.

Expert Systems

- Rule-based systems designed to emulate human expert decision-making

- Utilize knowledge bases and inference engines

- Effective in narrow, well-defined domains

- Examples include medical diagnosis systems, financial planning tools, and modern applications like IBM Watson for healthcare

- Challenges include knowledge acquisition and maintenance
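The split between a knowledge base and an inference engine can be illustrated with a minimal forward-chaining sketch. The rules and fact names below are hypothetical toy examples, not taken from MYCIN or any real expert system, and real systems typically add certainty factors, explanations, and conflict resolution.

```python
# Knowledge base: (conditions, conclusion) pairs. Hypothetical toy rules.
RULES = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "short_of_breath"}, "refer_to_doctor"),
]


def forward_chain(facts, rules):
    """Inference engine: apply rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
print(sorted(derived))
```

Adding or changing a rule only touches `RULES`, not `forward_chain`, which is one reason knowledge acquisition and maintenance, rather than the engine itself, tends to be the hard part.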

Historical note: Expert systems gained prominence in the 1970s and 1980s, with significant commercial applications. Notable event: The creation of MYCIN in 1972, one of the first expert systems for diagnosing blood infections.

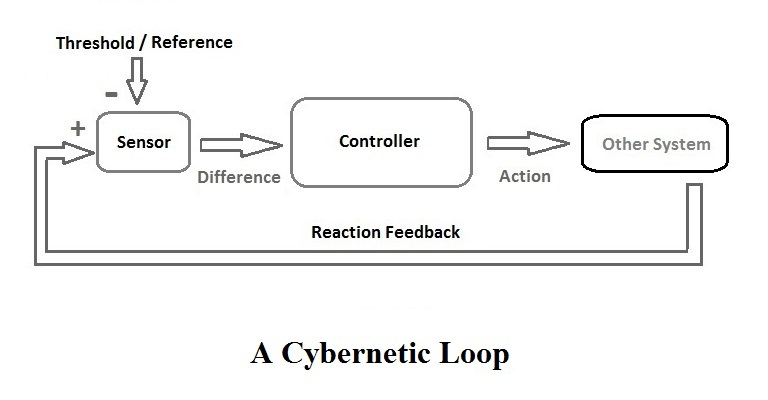

Cybernetics & Embodied AI

- Studies control and communication in animals and machines

- Focuses on feedback loops and self-regulating systems

- Interdisciplinary field combining engineering, biology, and cognitive science

- Influential in early AI development and robotics

- Key concepts: homeostasis, feedback mechanisms, and system behavior

- Modern applications include autonomous vehicles and smart home systems

- Intelligence as a property of agents embodied in environments

- Modern examples include Boston Dynamics’ robots and social robots like Sophia
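The feedback-loop idea behind homeostasis can be sketched as a simple proportional controller. The thermostat framing, the `gain` value, and the function name are assumptions chosen for illustration; this is not a model of any specific cybernetic system.

```python
def regulate(temperature, setpoint, gain=0.5, steps=20):
    """Negative feedback: each step corrects a fraction of the current error."""
    history = [temperature]
    for _ in range(steps):
        error = setpoint - temperature   # measure the deviation from the goal
        temperature += gain * error      # act to reduce that deviation
        history.append(temperature)
    return history


trace = regulate(temperature=15.0, setpoint=21.0)
print(round(trace[-1], 3))
```

The system never needs a model of why the temperature is off. It only measures the error and acts against it, which is the core of a self-regulating loop.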

Historical note: Cybernetics emerged as a distinct field in the 1940s, influencing early AI research. Notable event: The publication of Norbert Wiener’s book “Cybernetics” in 1948, which laid the foundation for the field.

Historical note: Embodied AI gained traction in the 1980s and 1990s as a reaction to traditional symbolic AI approaches. Notable event: Rodney Brooks’ development of behavior-based robotics in the mid-1980s, exemplified by the subsumption architecture.

Connectionism

- Intelligence as an emergent property of a large number of connected units

- Modern examples include deep learning models like GPT-3 and AlphaGo

Emergence occurs when a complex entity has properties or behaviors that its parts do not have on their own, and which emerge only when the parts interact in a wider whole.

Historical note: Connectionism experienced a resurgence in the 1980s with the development of parallel distributed processing models. Notable event: The publication of “Parallel Distributed Processing” by David Rumelhart and James McClelland in 1986, which popularized neural network approaches.
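A small sketch of emergence in a network of connected units: no single threshold unit can compute XOR, but three of them wired together can. The units below follow the spirit of the McCulloch-Pitts neuron, with the simplifying assumption that inhibition is expressed as a negative weight. The weights and thresholds are hand-picked for illustration, not learned.

```python
def unit(inputs, weights, threshold):
    """Threshold unit: fire (1) if the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def xor_network(a, b):
    h_or = unit([a, b], [1, 1], threshold=1)    # fires if a OR b
    h_and = unit([a, b], [1, 1], threshold=2)   # fires if a AND b
    # Output fires when the OR unit is on and the AND unit is off.
    return unit([h_or, h_and], [1, -1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```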

Cognitive Modeling

Model the behavior of the human mind as a computer program

Newell & Simon’s General Problem Solver

Definitions of Intelligence

Logic & Rationality

Find the “Laws of Thought”, and build AI that follows them.

Aristotle’s syllogisms

Greek Philosopher Aristotle’s syllogisms are a cornerstone of classical logic and deductive reasoning. They consist of three parts:

- Major premise: A general statement

- Minor premise: A specific instance or example

- Conclusion: The logical result derived from the premises

Example:

- All cats have whiskers. (Major premise)

- Fluffy is a cat. (Minor premise)

- Therefore, Fluffy has whiskers. (Conclusion)
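The whiskers example can be written as a mechanical rule, which is the sense in which "Laws of Thought" could be built into a program. This is a minimal sketch covering only this one form of syllogism; the `conclude` function and the tuple encoding of premises are assumptions made for illustration.

```python
def conclude(major, minor):
    """Derive a conclusion from two premises that share a middle term.

    major: (middle, predicate), read as "all <middle> are/have <predicate>"
    minor: (subject, middle),   read as "<subject> is a <middle>"
    """
    middle, predicate = major
    subject, minor_middle = minor
    if middle != minor_middle:
        raise ValueError("premises do not share a middle term")
    return (subject, predicate)


result = conclude(major=("cat", "has whiskers"), minor=("Fluffy", "cat"))
print(result)  # ('Fluffy', 'has whiskers')
```

The function checks only the form of the argument. If a premise is false, the conclusion is still derived, which is exactly the limitation of relying on the accuracy of premises.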

Syllogisms follow specific forms and rules, such as:

- The middle term must be distributed in at least one premise

- The conclusion must not have a term distributed unless it is distributed in a premise

- There must be exactly three terms in a syllogism

The traditional analysis that grew out of Aristotle's work distinguishes 256 possible forms of syllogism, of which 24 are traditionally counted as valid.

Limitations of syllogisms:

- May oversimplify complex real-world situations

- Rely on the accuracy of premises, which may be flawed

- Can lead to false conclusions if premises are incorrect or improperly structured

Bounded Rationality

- Herbert Simon

- Recognizes limitations of human cognition and decision-making

- Acknowledges constraints on information, time, and cognitive capacity

- Contrasts with “perfect” or “unbounded” rationality assumptions

- Implies satisficing behavior rather than optimizing

- Explains why people often make “good enough” choices rather than optimal ones
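The difference between optimizing and satisficing can be sketched in a few lines. The apartment data, the rating function, and the aspiration level of 7 are made-up values for illustration only.

```python
def optimize(options, score):
    """Unbounded rationality: examine every option and take the best one."""
    return max(options, key=score)


def satisfice(options, score, aspiration):
    """Bounded rationality: take the first option that is good enough."""
    for option in options:
        if score(option) >= aspiration:
            return option   # stop searching as soon as the aspiration is met
    return None             # nothing met the aspiration level


def rating(apartment):
    return apartment[1]


# Hypothetical apartments, listed in the order they are viewed: (name, rating)
apartments = [("A", 6), ("B", 8), ("C", 9), ("D", 7)]

print(optimize(apartments, rating))
print(satisfice(apartments, rating, aspiration=7))
```

The satisficer stops at the second apartment and never sees the third, trading some quality for far less search effort.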

Logical Positivism

- Rudolf Carnap

- Emphasizes empirical verification and logical analysis

- Seeks to eliminate metaphysical statements from scientific discourse

- Focuses on the logical structure of scientific theories

- Influenced early approaches to AI and cognitive science

Parity with Human Performance

Alan Turing

- Proposed the Turing Test as a measure of machine intelligence

- Emphasized behavioral equivalence between humans and machines

- Influenced development of natural language processing and chatbots

Thought Processes vs. Behavior

What dictates intelligence? What should we measure?

Proponents of Measuring Thought Processes:

Noam Chomsky

- Emphasized the importance of studying internal mental structures

- Criticized behaviorism for neglecting cognitive processes

- Developed theories of generative grammar and universal grammar

Jerry Fodor

- Proposed the Language of Thought hypothesis

- Thinking occurs in a language-like cognitive process in the mind

- Argued for the existence of a “mentalese” or internal cognitive representational system

- Emphasized the importance of studying mental representations and computational processes

Allen Newell and Herbert A. Simon

- Developed the Physical Symbol System Hypothesis

- Proposed that intelligent action is produced by manipulating symbols

- Emphasized the importance of studying problem-solving strategies and cognitive architectures

Proponents of Measuring Behavior:

- B.F. Skinner

- Pioneered the behaviorist approach to psychology

- Emphasized the importance of observable behavior over internal mental states

- Developed the theory of operant conditioning, in which behavior is shaped by reinforcement

- Edward Thorndike

- Formulated the Law of Effect, linking behavior to its consequences

- Developed early theories of animal intelligence based on observable behavior

- Influenced the development of behaviorism and reinforcement learning in AI

- John Watson

- Founder of behaviorism

- Argued that psychology should focus on observable behavior rather than internal mental processes

- Emphasized the role of environment in shaping behavior

Behavioral measures are easy to fool

- ELIZA system demonstrated how simple pattern-matching could create the illusion of understanding

- Showed that seemingly intelligent behavior can be produced by a system with no real understanding
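How little machinery this kind of pattern-matching needs can be seen in a minimal ELIZA-style sketch. The two patterns and the response templates below are hypothetical and are not taken from the original ELIZA script.

```python
import re

# (pattern, response template) pairs. Hypothetical rules for illustration.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT = "Please go on."


def respond(message):
    """Echo part of the user's message back inside a canned template."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(match.group(1))
    return DEFAULT   # fallback when no pattern matches


print(respond("I feel sad."))
print(respond("Hello"))
```

Nothing in the program represents what "sad" means. The reply is built purely by copying text, yet in conversation it can read as attentive.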

Solipsism

The philosophical idea that only one’s own mind is sure to exist and that knowledge of anything outside one’s own mind is uncertain.

“I think, therefore I am…” (1637) - René Descartes

“… not sure about anything else” - solipsism

Measures of Intelligence

Turing Test

- Evaluates a machine’s ability to exhibit intelligent behavior indistinguishable from a human

- Involves a human evaluator engaging in natural language conversations with both a human and a machine

- The machine succeeds if the evaluator cannot reliably distinguish it from the human

Historical note: Proposed by Alan Turing in 1950 in his paper “Computing Machinery and Intelligence”

IQ Tests

- Standardized tests designed to measure cognitive abilities and potential

- Typically assess skills such as logical reasoning, problem-solving, and pattern recognition

- Results are often expressed as an Intelligence Quotient (IQ) score

Historical note: The first modern IQ test was developed by Alfred Binet and Theodore Simon in 1905 to identify French schoolchildren who needed extra help in their studies

Emotional Intelligence (EQ)

- Measures the ability to recognize, understand, and manage one’s own emotions and those of others

- Includes skills like empathy, self-awareness, and social competence

- Often considered complementary to traditional cognitive intelligence measures

Multiple Intelligences Theory

- Proposed by Howard Gardner, suggesting that intelligence is not a single general ability

- Identifies distinct types of intelligence, including linguistic, logical-mathematical, spatial, musical, bodily-kinesthetic, interpersonal, intrapersonal, and naturalistic

- Emphasizes the diverse ways individuals can be intelligent

Practical Intelligence

- Focuses on the ability to solve real-world problems and adapt to everyday situations

- Involves skills like common sense, street smarts, and tacit knowledge

- Often contrasted with academic or theoretical intelligence

Fluid and Crystallized Intelligence

- Fluid intelligence: The ability to solve novel problems, reason abstractly, and adapt to new situations

- Crystallized intelligence: Knowledge and skills acquired through experience and education

- Both considered important components of overall cognitive ability

Adaptive Behavior Scales

- Assess an individual’s ability to function independently in everyday life

- Measure skills such as communication, self-care, social skills, and practical life skills

- Often used in conjunction with IQ tests, especially for individuals with developmental disabilities

Cognitive Ability Tests

- Evaluate various mental abilities such as reasoning, perception, memory, verbal and numerical ability, and problem-solving

- Often used in educational and occupational settings for placement or selection purposes

- Can be general or designed to assess specific cognitive domains

Achievement Tests

- Measure knowledge or skills in specific academic areas or subjects

- Often used in educational settings to assess learning progress and outcomes

- Examples include standardized tests like SAT, ACT, or subject-specific exams

Further Reading

Deep Learning History (Schmidhuber)

Search

file:///Users/gabe/notes/Lecturing/classes/ECS170/slides/1_search_slides.pdf